Providing guidelines for the

Our Philosophy on AI Model Evaluation

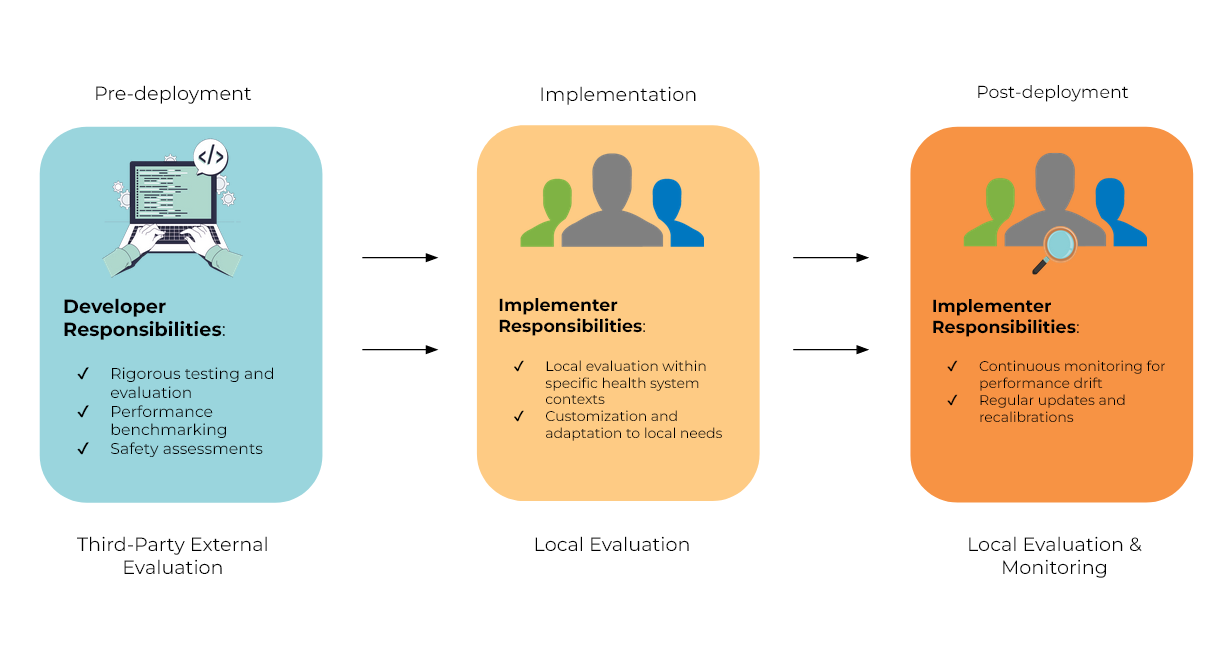

Developer’s Responsibility: Evaluate the AI model thoroughly before deployment to ensure it meets safety and performance standards.

End-User’s Responsibility: Conduct local evaluations to ensure the AI tool fits the specific needs and conditions of the health system.

End-User’s Monitoring Responsibility: Monitor the AI tool’s performance over time to ensure it remains effective, and adapt its use as conditions change.

AI That Serves All of Us

Our coalition intends to develop a framework with health equity in mind, one that aims to address algorithmic bias.

We created the Coalition for Health AI (CHAI™) to welcome a diverse array of stakeholders to listen, learn, and collaborate to drive the development, evaluation, and appropriate use of AI in healthcare.

Founding Partners